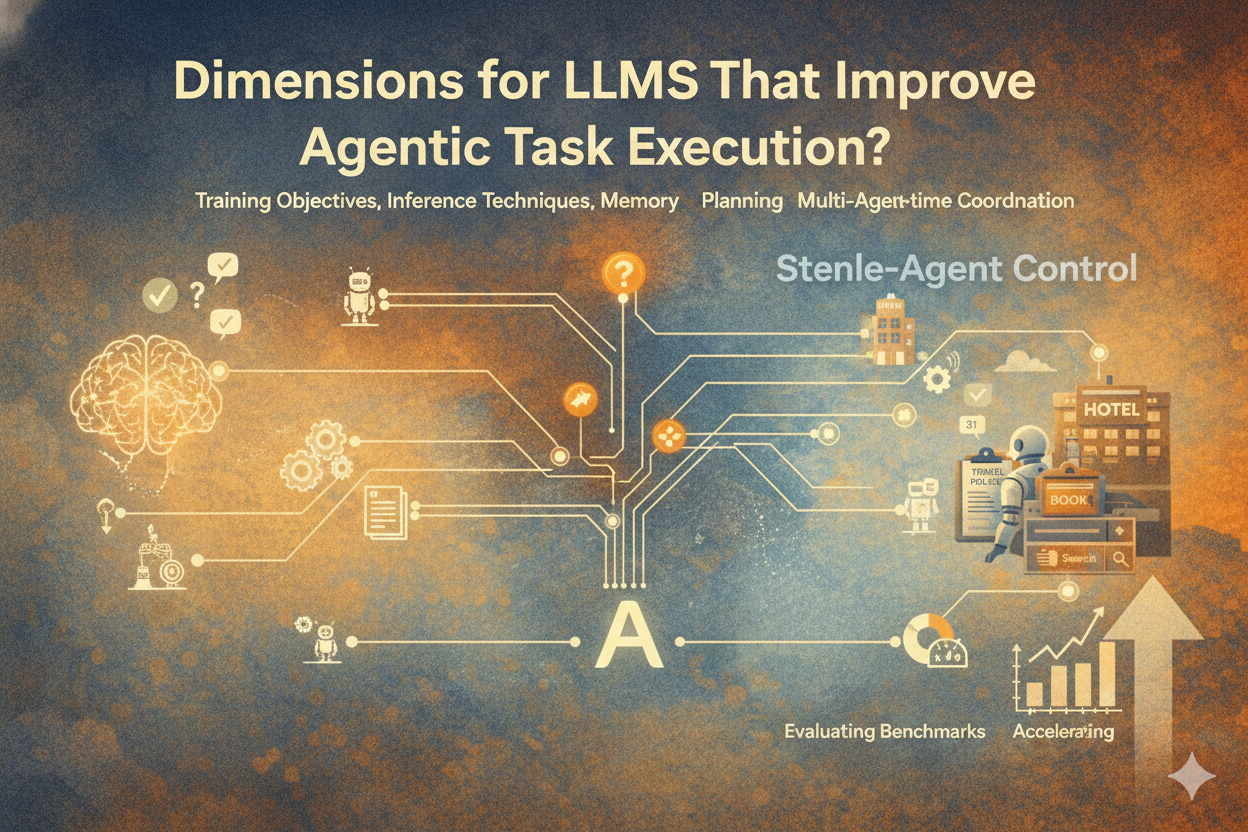

Dimensions for LLMs That Improve Agentic Task Execution

What actually improves agent performance in LLMs? This post breaks it into eight measurable dimensions (pre-training signal, instruction alignment, in-context learning, context utilization, tool and API grounding, reasoning and planning, self-critique, and system architecture), each mapped to benchmarks you can track.

Advances in large language models increasingly target not just knowledge or alignment, but the model’s ability to interact with tasks. These improvements span training objectives, inference-time techniques, memory structures, planning loops, and multi-agent coordination strategies. Work on in-context learning (arXiv:2301.00234), Multiplex Thinking (arXiv:2601.08808), Parallel-Distill-Refine (arXiv:2510.01123), Engram (arXiv:2601.07372), and SAS/MAS agent architectures (arXiv:2512.08296v1) all represents meaningful steps toward more capable task-solving systems. However, we also need a way to measure how well each aspect of a foundational model "accelerates" the agent built on top of it.

Below is an organized taxonomy of optimization dimensions, each with a short description, examples of relevant techniques, plus representative evaluation benchmarks.

1. Pre-Training Signal

The Pre-Training Signal dimension assesses the foundational model's raw, inherent capabilities, which are learned from its vast training corpora. This competence forms the bedrock of an agent system, determining its fundamental capacity for complex reasoning and structured task execution before any contextual prompting. A strong signal is necessary for the agent to reliably process the logic required for orchestration and execution.

Techniques Included in this Dimension (Core Signals):

- Code/Structured Logic: Inherent understanding of programming languages and structured data formats, which is foundational for reliable output generation needed for tool calling and maintaining a coherent chain of action.

- Comparative Reasoning: The model's raw ability to process multi-step logic, which underpins the agent's capacity for self-critique and reflection necessary for error recovery.

- API Schema Comprehension: Implicit grasp of function signatures, parameter passing, and execution flow, enabling the model to learn and manage the complexity of tool execution efficiently.

Benchmark List:

- HumanEval / MBPP: Measures code generation and structured logic application, which is a direct proxy for the model's ability to handle the reliable, structured outputs necessary for tool execution and a strong chain of action.

- Tool Learning Benchmark (ToolBench): Focuses on the ability to plan, select, and use external tools or APIs, directly assessing the foundational capacity for task classification and tool calling.

- MATH: Measures general mathematical and logical prowess for multi-step problem-solving, which forms the basis for the complex reasoning required for robust decision-making and logic-based error recovery.
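HumanEval-style results are usually reported as pass@k. As a minimal sketch, the widely used unbiased estimator can be computed as below; the function names and the `results` layout are illustrative assumptions, not part of any official harness.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: samples generated, c: samples that passed the unit tests,
    k: budget being evaluated. Returns the probability that at least
    one of k samples drawn without replacement from the n is correct.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failures exist, so any k-subset contains a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: dict[str, tuple[int, int]], k: int) -> float:
    """Average pass@k over problems; results maps problem id -> (n, c)."""
    return sum(pass_at_k(n, c, k) for n, c in results.values()) / len(results)
```

For k = 1 this reduces to the fraction of samples that pass, which is why pass@1 reads like a plain accuracy.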

2. Instruction Alignment

The Instruction Alignment dimension assesses the foundational model's post-training capabilities—its ability to reliably obey complex, multi-step task specifications, adopt assigned agent roles, and adhere to structured output formats (like JSON schemas). This is achieved through fine-tuning on human and AI feedback, which directly reduces prompt brittleness and makes the complex logic of an agent's orchestration and execution layers far more reliable.

Techniques Included in this Dimension:

- Supervised Fine-Tuning (SFT): Training to follow explicit instructions and adopt specific output schemas, improving the reliability of tool calling and chain of action maintenance.

- Reinforcement Learning from Human Feedback (RLHF) / AI Feedback (RLAIF): Optimizing the model to prioritize desired behaviors and reject conflicting/vague instructions, strengthening the agent's task classification and ensuring high-quality, non-collapsing recommendations.

- Instruction-Specific Corpus Tuning: Fine-tuning on a dataset of complex, agentic tasks (like planning or self-correction) to enhance the model's capacity for reflection and error recovery.

Benchmark List:

- Instruction Following Evaluation (IFE, commonly published as IFEval): Directly measures the model's ability to follow precise instructions and constraints across various tasks, which is essential for successful task orchestration.

- AlpacaEval / MT-Bench: Evaluates the quality and helpfulness of responses in conversational, multi-turn settings, acting as a proxy for the agent's consistency and adoption of a persistent role for reliable execution.

- Structured Output Compliance (Custom Benchmarks): Focuses specifically on the model's adherence to required structured formats (e.g., JSON schemas for API inputs), which is non-negotiable for programmatic tool execution.
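A custom structured-output benchmark can be as simple as counting how many raw model outputs parse and match the expected fields. The sketch below uses only the standard library; the schema and its field names are hypothetical, and a real evaluation would likely use a full JSON Schema validator.

```python
import json

# Hypothetical schema for illustration: field name -> accepted Python type(s).
FLIGHT_SEARCH_SCHEMA = {"origin": str, "destination": str, "max_price": (int, float)}


def _matches(value, expected) -> bool:
    # bool is a subclass of int in Python, so reject it unless bool is expected.
    if isinstance(value, bool) and expected is not bool:
        return False
    return isinstance(value, expected)


def is_compliant(raw_output: str, schema: dict) -> bool:
    """True if raw_output is a JSON object with exactly the schema's fields and types."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return False  # extra prose around the JSON counts as a failure
    if not isinstance(data, dict) or set(data) != set(schema):
        return False
    return all(_matches(data[name], expected) for name, expected in schema.items())


def compliance_rate(outputs: list[str], schema: dict) -> float:
    """Fraction of outputs that satisfy the schema."""
    return sum(is_compliant(o, schema) for o in outputs) / len(outputs)
```

Treating any surrounding prose as a failure is a deliberately strict choice: a programmatic caller cannot use an output it cannot parse.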

3. In-Context Learning (ICL)

The In-Context Learning (ICL) dimension measures the foundational model's ability to infer tasks, adapt its reasoning, and customize its output based on demonstrations provided directly within the prompt, without any weight updates. This capability is paramount for agent systems, as it allows for rapid, session-specific customization of behavior, minimizing the need for constant, expensive fine-tuning for every new task or user preference. It enables the agent to quickly adjust its chain of action based on a live example.

Note that a recent paper, "CL-Bench: A Benchmark for Context Learning" (arXiv:2602.03587), challenges how much genuine in-context learning capability current frontier models actually have. We will soon publish our own take on this.

Techniques Included in this Dimension:

- Few-Shot Prompting: Providing a few input/output examples within the prompt to guide the model's behavior for the current task.

- Demonstration-Based Task Inference: The model's ability to deduce the underlying goal or specific output format (e.g., a tool calling JSON structure) from a single or few-shot example.

- Pattern Induction: Generalizing a rule or constraint from a limited set of examples to guide future steps in a multi-step process, which is critical for consistent task execution.

Benchmark List:

- Big-Bench Hard (BBH): Measures complex, multi-step reasoning and problem-solving, which evaluates the model's ability to induce patterns and effectively perform sophisticated reflection and self-correction based on in-context clues.

- MMLU (Massive Multitask Language Understanding): Tests the model's ability to leverage its internal knowledge and reason across 57 subjects when given a few examples, showcasing its capacity to ground specific tool calling decisions with deep domain context.

- Prompting/Demonstration Robustness Benchmarks: Focuses on the stability and reliability of the model's output and reasoning when the in-context demonstrations are varied, ensuring that small changes in the prompt do not lead to failures in error recovery or chain of action maintenance.
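One simple demonstration-robustness probe is to reorder the few-shot examples and check whether the answer stays the same. The following is a sketch under stated assumptions: `model` is any callable from prompt text to answer text, and the `Input:`/`Output:` prompt template is a hypothetical choice.

```python
import itertools
from collections import Counter
from typing import Callable


def build_prompt(demos: list[tuple[str, str]], query: str) -> str:
    """Format few-shot demonstrations followed by the unanswered query."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in demos)
    return f"{shots}\n\nInput: {query}\nOutput:"


def order_robustness(model: Callable[[str], str],
                     demos: list[tuple[str, str]], query: str) -> float:
    """Fraction of demonstration orderings that produce the majority answer.

    1.0 means the answer never changes when the examples are shuffled.
    """
    answers = [model(build_prompt(list(order), query))
               for order in itertools.permutations(demos)]
    _, majority_count = Counter(answers).most_common(1)[0]
    return majority_count / len(answers)


def copycat_model(prompt: str) -> str:
    """Stub with a recency bias: repeats the label of the last demonstration."""
    return prompt.split("Output:")[-2].strip().split("\n")[0]
```

The number of orderings grows factorially, so a real harness would sample permutations rather than enumerate them once there are more than a handful of demonstrations.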

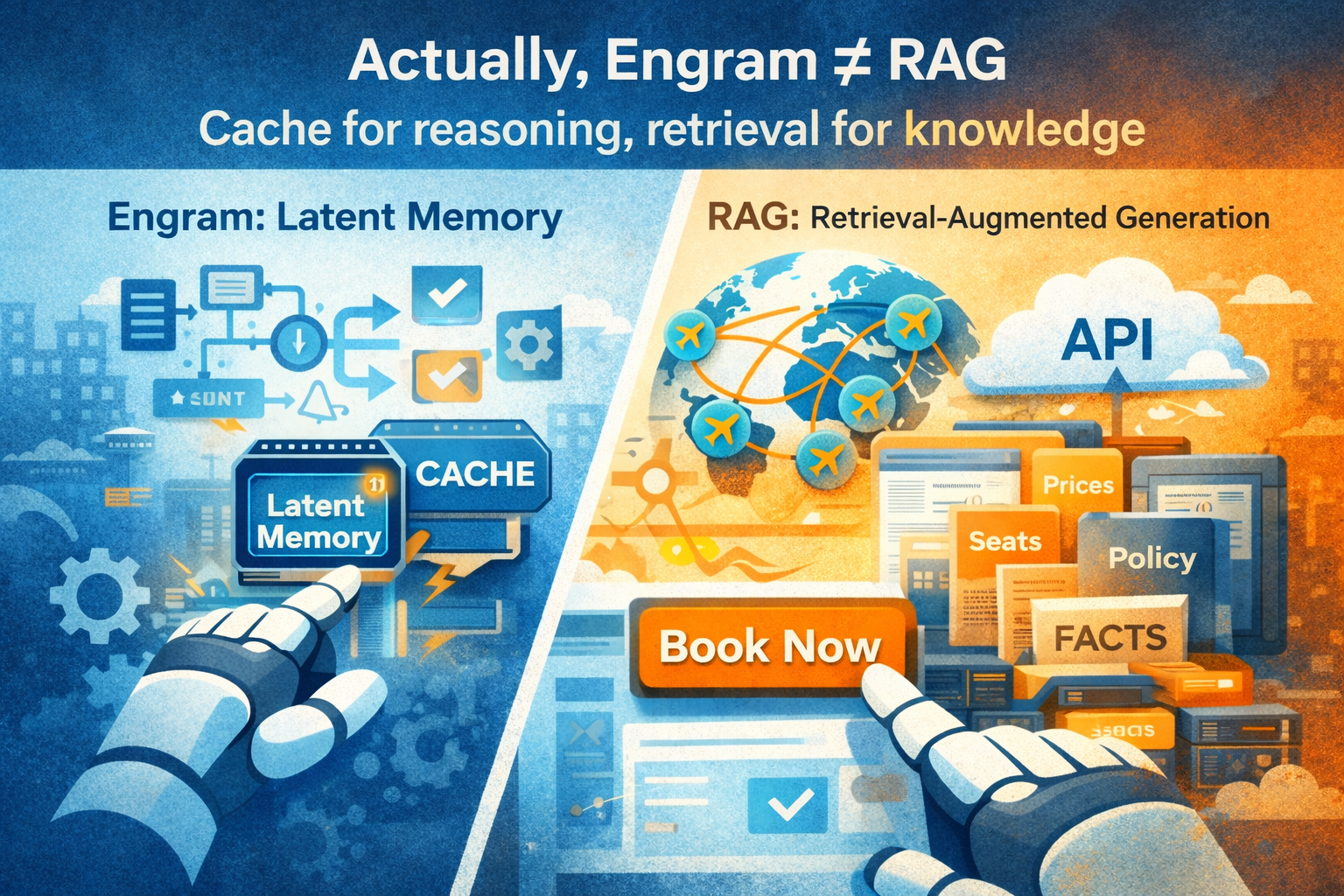

4. Context Utilization (Long Context & Retrieval)

The Context Utilization dimension assesses the model's ability to efficiently process extremely long context windows and accurately retrieve and integrate external information via Retrieval-Augmented Generation (RAG). This is foundational for agentic systems, as it allows for the injection of specialized domain knowledge (e.g., company policies) to override generic training priors, ensuring that all decisions and the chain of action are grounded in relevant, up-to-date data.

Techniques Included in this Dimension:

- Retrieval-Augmented Generation (RAG): The mechanism for injecting policy, user history, or real-time data to ground all tool execution and prevent LLM collapse toward generic outputs.

- Context Window Management & Prioritization: Techniques to ensure that critical, low-frequency data (e.g., a specific constraint in a long conversation) remains salient throughout the conversation for consistent task execution.

- Multi-Hop Retrieval: The ability to perform sequential searches, where the output of one retrieval informs the query for the next, supporting deep reflection and complex error recovery strategies.

Benchmark List:

- Needle-in-a-Haystack: Measures the model's robustness in retrieving a critical piece of information embedded deep within a massive context window, directly testing its reliability in long-running, multi-step agent conversations and chain of action maintenance.

- Multi-Hop Reasoning Benchmarks (e.g., HotpotQA): Evaluates the model's ability to synthesize an answer or plan by integrating facts from multiple retrieved documents, which is essential for complex task classification and for the deep reasoning required in reflection.

- Knowledge Conflict Resolution Benchmarks: Focuses on the model's capacity to correctly prioritize newly retrieved, explicit information (e.g., a hard constraint) over conflicting internal or historical knowledge, which is vital for safe and trusted tool execution within policy boundaries.
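A needle-in-a-haystack probe can be sketched in a few lines: plant one fact at a chosen depth in filler text and check whether the reply contains it. Everything named here (`FILLER`, the stub `tail_only_model`, the secret-code needle) is an illustrative assumption; a real run would call an actual model and use far longer contexts.

```python
import re
from typing import Callable

FILLER = "The sky was clear that day."


def build_haystack(needle: str, n_sentences: int, depth: float) -> str:
    """Insert needle at a relative depth (0.0 = start, 1.0 = end) of filler text."""
    sentences = [FILLER] * n_sentences
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)


def needle_recall(model: Callable[[str], str], needle: str, answer: str,
                  question: str, depths: list[float],
                  n_sentences: int = 200) -> dict[float, bool]:
    """Map each depth to whether the model's reply contains the answer."""
    hits = {}
    for depth in depths:
        context = build_haystack(needle, n_sentences, depth)
        reply = model(f"{context}\n\nQuestion: {question}")
        hits[depth] = answer.lower() in reply.lower()
    return hits


def tail_only_model(prompt: str, window: int = 2000) -> str:
    """Stub that only 'sees' the last `window` characters of the prompt."""
    match = re.search(r"secret code is (\d+)", prompt[-window:])
    return match.group(1) if match else "I don't know"
```

Sweeping both depth and context length gives the familiar recall heat map; the stub here fails everywhere except near the end of the context.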

5. Tool & API Grounding

The Tool & API Grounding dimension is the core capability that enables a foundational model to transition from planning to action by correctly interacting with external functions and APIs. This grounding is essential for any agent system to perform real-world tool execution, ensuring that all arguments, schemas, and execution logic are perfectly formatted to guarantee a reliable chain of action. A well-grounded model reduces the risk of run-time errors by reliably translating natural language intent into machine-actionable calls.

Techniques Included in this Dimension:

- Tool Selection & Routing: The model's ability to accurately choose the single most appropriate tool from a diverse toolkit based on the user's intent, which is a fundamental step in task classification.

- Structured Argument Generation (Schema Adherence): Generating syntactically correct and semantically accurate structured calls (e.g., JSON or function signatures) with the correct parameters, which is non-negotiable for tool execution.

- Tool Output Interpretation & Conditioning: The model's competence in processing and integrating the output of an API call—including complex data structures or error messages—to inform the next step in the chain of action or trigger reflection.

Benchmark List:

- Tool Learning Benchmark (ToolBench): Measures the ability to plan, select, and correctly use a wide array of tools to complete multi-step tasks, directly assessing the overall tool execution and orchestration pipeline.

- API Call Generation/Parsing Benchmarks (e.g., FuncQA): Focuses specifically on the semantic and syntactic correctness of the generated API call arguments and adherence to the function schema, ensuring reliable and error-free chain of action.

- Code Generation with External Library Use (Custom Evals): Evaluates the model's capacity to integrate external functionality into a larger program flow, which is a strong proxy for managing multi-step, tool-based task orchestration and execution flow.
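The three techniques above (selection, schema adherence, output handling) meet at the point where the agent runtime receives a model-generated call. Below is a minimal sketch of that boundary, assuming the model emits a JSON object with `name` and `arguments` keys; that wire format, the `search_flights` stub, and the `TOOLS` registry are hypothetical.

```python
import inspect
import json
from typing import Callable


def search_flights(origin: str, destination: str, max_price: float = 1000.0) -> list[dict]:
    """Stub tool: a real implementation would call a flight API."""
    return [{"route": f"{origin}-{destination}", "price": min(max_price, 320.0)}]


TOOLS: dict[str, Callable] = {"search_flights": search_flights}


def execute_tool_call(raw_call: str, tools: dict[str, Callable]) -> dict:
    """Parse, validate, and dispatch one model-generated tool call.

    Errors are returned as data rather than raised, so they can be fed
    back to the model as the tool output for its next step.
    """
    try:
        call = json.loads(raw_call)
        name, args = call["name"], call["arguments"]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return {"ok": False, "error": f"malformed call: {exc}"}
    if name not in tools:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        # bind() checks the arguments against the function signature
        # without running the tool.
        bound = inspect.signature(tools[name]).bind(**args)
    except TypeError as exc:
        return {"ok": False, "error": f"bad arguments: {exc}"}
    return {"ok": True, "result": tools[name](*bound.args, **bound.kwargs)}
```

Counting the share of calls that come back with `ok` set to true gives a rough schema-adherence rate; it checks argument names and arity, not argument types or semantic correctness.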

6. Reasoning & Planning

The Reasoning & Planning dimension is the core cognitive function that enables an agent to translate a high-level user goal into an executable plan. It assesses the model's ability to decompose a task into actionable steps, simulate outcomes of potential actions, and identify implicit constraints embedded in the user's request. This provides the structured thought process necessary to define the robust, non-collapsing chain of action and ensure successful task orchestration.

Techniques Included in this Dimension:

- Tree-of-Thoughts (ToT) / CoT with Simulation: Methods that force the model to explore multiple solution branches, allowing it to simulate outcomes for each path and choose the best chain of action that respects all constraints.

- Goal-Directed Decomposition: The structural process of breaking down a complex, end-to-end task (e.g., "book a trip") into a sequential and logical set of sub-tasks (e.g., search flights, filter by policy, rank options), which is the essence of task decomposition.

- Constraint Synthesis & Prioritization: Techniques (often using structured output) to identify implicit constraints (like "I fly United for status" being a preference, not a hard filter) and weigh them against explicit constraints (like "Must arrive by 6pm") for effective tool execution.

Benchmark List:

- Big-Bench Hard (BBH): Tests complex, multi-step logical deduction and requires the model to perform significant internal task decomposition to arrive at a solution.

- Constraint Satisfaction Problem (CSP) Benchmarks: Custom evaluations that test the model's ability to balance and solve trade-offs across competing constraints, directly assessing its capacity to identify implicit constraints and prioritize them for a final decision.

- Planning Benchmarks (e.g., PDDL-based Evals): Measures the model's ability to generate a sequence of actions (a plan or chain of action) that successfully navigates an environment (or a search space) from a starting state to a goal state, implicitly testing its ability to simulate outcomes before execution.
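The difference between a hard constraint ("Must arrive by 6pm") and a soft preference ("I fly United for status") can be made concrete in code. This is a sketch, not a prescribed method: the `Flight` fields, the `preference_bonus` value, and the idea of pricing the preference as a discount are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flight:
    airline: str
    arrival_hour: int  # 24-hour clock, local time
    price: float


def rank_flights(flights: list[Flight], latest_arrival: int = 18,
                 preferred_airline: str = "United",
                 preference_bonus: float = 50.0) -> list[Flight]:
    """Filter on the hard constraint, then rank with the soft preference."""
    # Hard constraint: late arrivals are removed outright, however cheap.
    feasible = [f for f in flights if f.arrival_hour <= latest_arrival]

    # Soft preference: the preferred airline gets a discount in the ranking
    # score but can still lose to a sufficiently cheaper alternative.
    def score(f: Flight) -> float:
        bonus = preference_bonus if f.airline == preferred_airline else 0.0
        return f.price - bonus

    return sorted(feasible, key=score)
```

If the model mistakes the preference for a hard filter, the cheaper non-United options disappear from the ranking entirely, which is the failure the Constraint Synthesis bullet describes.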

7. Self-Critique, Verification & Credit Assignment

The Self-Critique, Verification & Credit Assignment dimension assesses the foundational model's ability to operate within a self-improving inference loop, passing solutions through explicit critique and feedback cycles. Techniques like Self-Refine and debate systems introduce a form of test-time credit assignment without requiring gradient updates. This capability is vital for robust error recovery, as it allows the agent to verify its plan against logic and constraints before and after execution, ensuring a reliable chain of action.

Techniques Included in this Dimension:

- Sequential Refinement (SR) / Parallel-Distill-Refine (PDR): Formalized, multi-step reflection techniques that force the model to critique its own intermediate or final outputs, directly strengthening the internal mechanism for error recovery.

- Debate Systems / LLM-as-Judge: Using one model or thread to generate an action and another to verify or critique it, which is essential for high-assurance task orchestration and compliance verification.

- Reinforcement Learning with Verifiable Rewards (RLVR) / Verifier Loops: Using an explicit feedback signal from a verifier to score the quality of an agent's decision, either as a training reward (RLVR) or as a non-gradient signal in a test-time verifier loop, improving the quality of its implicit credit assignment at test time.

Benchmark List:

- VerifyBench: Measures the ability of an LLM to self-correct and verify its answers against logical and factual constraints, directly testing the reliability of the verification and error recovery processes.

- Best-of-N GSM8K curves: Evaluates the quality of a model's self-critique loop by comparing the best answer selected from $N$ generated responses against a single, greedily decoded response. This is a proxy for the effectiveness of the model's self-correction in a reasoning-heavy chain of action.

- Constraint Obedience Benchmarks: Measures the rate at which the model's final, executable output (after a critique loop) avoids violating explicit hard-coded constraints, testing the final output's compliance with policy before tool execution.
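A best-of-N curve can be sketched as follows: draw N candidates, let a scorer pick one, and record accuracy as N grows. The sampler and scorer below are deterministic stubs chosen so the example runs without a model; in particular, `oracle_score` recomputes the true answer, which a real verifier could not do.

```python
from typing import Callable

OFFSETS = (3, -10, 0, 7)  # stub errors: only the third sample is exactly right


def stub_sample(prompt: str, i: int) -> int:
    """Stand-in for the i-th sampled answer to an 'a * b' prompt."""
    a, b = (int(part) for part in prompt.split(" * "))
    return a * b + OFFSETS[i % len(OFFSETS)]


def oracle_score(prompt: str, candidate: int) -> float:
    """Stand-in verifier: 1.0 if the candidate equals the true product."""
    a, b = (int(part) for part in prompt.split(" * "))
    return 1.0 if candidate == a * b else 0.0


def best_of_n(sample: Callable[[str, int], int], score: Callable[[str, int], float],
              prompt: str, n: int) -> int:
    """Generate n candidates and return the one the scorer rates highest."""
    candidates = [sample(prompt, i) for i in range(n)]
    return max(candidates, key=lambda c: score(prompt, c))


def accuracy_curve(problems: list[tuple[str, int]], sample, score,
                   ns: list[int]) -> dict[int, float]:
    """Accuracy of best-of-n selection for each n in ns."""
    return {
        n: sum(best_of_n(sample, score, p, n) == gold for p, gold in problems) / len(problems)
        for n in ns
    }
```

With a weak scorer the curve flattens or even drops as N grows, so the shape of the curve says as much about the verifier as about the generator.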

8. System Scalability & Multi-Agent Architecture

The System Scalability & Multi-Agent Architecture dimension evaluates the foundational model's fitness for deployment in a complex, multi-component system designed for high concurrency and efficiency. It encompasses how a system's labor is specialized (modularization), how these specialized parts interoperate (coordination & communication), and the capacity for parallel execution to meet latency requirements for an end-to-end chain of action.

Techniques Included in this Dimension:

- Modularization & Expert Routing: Architecting the system with multiple specialized LLMs (or MoE components) and using a router to handle initial task classification and efficient dispatch.

- Message Passing & Arbitration: Protocols (e.g., structured JSON communication) that allow multiple agents to pass intermediate results, update a shared state, and resolve conflicts during collective task orchestration.

- Parallel Execution Management: Techniques for concurrently running multiple tool execution steps (e.g., searching for flights and hotels simultaneously) and managing the subsequent merging and reconciliation of results back into a coherent chain of action.

Benchmark List:

- AgentBench (Multi-Agent Track) / WebArena: Evaluates the overall performance of multiple agents completing a task in collaboration, inherently testing coordination (communication) and planning across agents for the final, successful task execution.

- MoE Latency & Quality Benchmarks: Measures the speed and accuracy trade-offs of using a specialized architecture (Mixture-of-Experts) compared to a single model, quantifying the real-world efficiency gains of modularization and potential parallelism.

- Multi-Agent Traffic Control (Custom Evals): Focuses on the ability of an agent system to manage resource contention and message flow under heavy load, directly assessing the robustness of the coordination protocols and the safety of concurrent/parallel actions.
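Parallel execution management, using the flights-and-hotels example above, might look like the sketch below. The two search coroutines are stubs with hard-coded results, and the merge rule (pick the cheapest option, record failures instead of aborting) is an assumed policy for illustration.

```python
import asyncio


async def search_flights(destination: str) -> list[dict]:
    """Stub tool: simulates network latency, then returns fixed options."""
    await asyncio.sleep(0.05)
    return [{"id": "F1", "price": 420.0}, {"id": "F2", "price": 380.0}]


async def search_hotels(destination: str) -> list[dict]:
    """Stub tool: simulates network latency, then returns fixed options."""
    await asyncio.sleep(0.05)
    return [{"id": "H1", "price": 150.0}, {"id": "H2", "price": 210.0}]


async def plan_trip(destination: str) -> dict:
    """Run both searches concurrently and merge them into one plan."""
    # return_exceptions=True keeps one failed tool from cancelling the other.
    results = await asyncio.gather(
        search_flights(destination),
        search_hotels(destination),
        return_exceptions=True,
    )
    plan = {"destination": destination, "errors": []}
    for key, result in zip(("flight", "hotel"), results):
        if isinstance(result, Exception):
            plan["errors"].append(f"{key}: {result}")
            plan[key] = None
        else:
            plan[key] = min(result, key=lambda option: option["price"])
    return plan
```

Calling `asyncio.run(plan_trip("Tokyo"))` runs both stubs in roughly the time of the slower one rather than the sum of the two, which is the latency benefit this dimension refers to.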

Appendix A - Sources of the scores

The information and figures above are drawn from the latest papers and official reports for each model. Key sources include OpenAI’s GPT-5.2 announcement, Google’s Gemini 3 blog, Anthropic’s Claude Opus 4.5 release notes, and benchmark leaderboards like Vals AI and LLM-Stats. Where exact data was unavailable, we inferred relative performance from context (noting such cases as “n/a” or estimates). Overall, these frontier models are extremely close on many metrics: each generally outperforms earlier models by a large margin, and the small differences we’ve noted are subject to change as new versions and evaluations emerge. All sources are the latest publicly available academic papers, official model blogs, or benchmark leaderboards as of February 2026.

Appendix B - Benchmark Remarks

Pre-Training Signal Benchmarks

Code Generation (HumanEval / MBPP): These measure how well models learned programming from pre-training. Claude Opus 4.5 currently achieves about 83.6% pass@1 on Python coding challenges, edging out Gemini 3 Pro (~81.7%) and GPT‑5.2 (~80.7%). (For reference, GPT‑4 was around 80% on HumanEval, so these models have slightly surpassed it.) Qwen3 also performs strongly on code tasks: the base 235B version reports 81.4% on MBPP. All these models can reliably write correct code for the majority of prompts.

ToolBench (Tool Use Learning): ToolBench evaluates how well the model learned to use external tools/APIs from its training. GPT‑5.2’s refinements in this area are evident: it scores 98.7% on the Tau2-Bench Telecom tool-use test, meaning it executes multi-step API calls almost flawlessly. This is a new state-of-the-art, up from ~95.6% in GPT‑5.1. Exact ToolBench scores for Gemini 3 and Claude 4.5 are not publicly given, but both are reported to be very strong at tool use: Gemini 3 triggered OpenAI’s “code red” by topping many tool-use leaderboards in late 2025, and Claude 4.5 was lauded for “the best frontier…tool calling we’ve seen yet”. We estimate both are in the mid-90% range on similar tool-use evaluations. (No specific ToolBench result is published for Qwen3.)

Math (No Tools vs With Tools): This refers to solving complex math problems directly vs with calculator/code assistance. GPT‑5.2 made headlines by scoring 100% on AIME 2025, a challenging math competition dataset (without external tools). On the even harder FrontierMath test (advanced math puzzles), GPT‑5.2 reached ~40% on Tier 1–3 problems and ~14.6% on the ultra-hard Tier 4, a jump over GPT‑5.1. With tool use (like running Python), it can solve many more math problems; for instance, it reached 40% on FrontierMath with code, versus near 0% for earlier models. Google’s Gemini 3 also set a record 23.4% on the MathArena Apex benchmark (extremely difficult math), the highest among all models. Public math results for Claude Opus 4.5 aren’t separately reported (though its reasoning gains likely improved math too), and Qwen3’s are not yet public. In summary, GPT‑5.2 and Gemini 3 are neck-and-neck on math, with GPT‑5.2 excelling especially when allowed to use tools or scripts to assist in calculation.

Instruction Alignment Benchmarks

Instruction Following (IFE): The Instruction-Following Evaluation (IFE or IFEval++) tests whether models follow complex user instructions and constraints. All three proprietary models demonstrate top-tier alignment here. While an exact IFE score for GPT‑5.2 isn’t published, OpenAI notes major alignment improvements (e.g. GPT‑5.2 produces 30% fewer errors or refusals than 5.1 on user queries). Gemini 3 likewise underwent extensive alignment fine-tuning and was regarded as highly reliable in following human intent (Google mentioned it significantly outperformed Gemini 2.5 on every major benchmark). Claude Opus 4.5 is also known for strong compliance with instructions (“handles ambiguity and tradeoffs without hand-holding” in Anthropic’s words). In practice, all these models very faithfully execute user instructions, with GPT‑5.2 and Claude 4.5 arguably setting the gold standard in alignment as of 2026.

AlpacaEval / MT-Bench (Chat Quality): These benchmarks compare models in chatbot conversations (MT-Bench comes from LMSYS). Gemini 3 Pro in particular shined here: it topped the LMSYS multi-turn arena with an Elo rating of 1501, meaning it often wins when judged against other models. (This was above GPT‑4’s level, and made Gemini 3 the #1 model on that platform in late 2025.) GPT‑5.2 was not directly tested in MT-Bench publicly, but it’s presumably on par or better than Gemini in chat quality (GPT‑5.1 was already ~8.8/10 MT-Bench, and 5.2 likely edges toward 9+). Claude Opus 4.5 is also highly rated in chat: users report it as extremely helpful and detailed, likely just slightly behind Gemini’s best mode. Qwen3’s chat performance is strong for an open model but not in the same league as these proprietary models (open evaluations placed smaller Qwen models around 7–8/10 on MT-Bench). In summary, Gemini 3 Pro currently has a slight lead in open-chat evaluations, while GPT‑5.2 and Claude 4.5 are roughly tied just behind (all three are extremely capable at multi-turn dialogue).
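To make an Elo figure like 1501 more concrete, the classic Elo formula converts a rating gap into an expected head-to-head win rate. This is a sketch only: the opponent rating of 1450 in the usage note is a made-up value, and arena leaderboards may fit their ratings with a different statistical model than the textbook formula assumed here.

```python
def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Classic Elo expected score of A against B (ties count as half a win)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))
```

For example, `expected_win_rate(1501, 1450)` comes out a little above one half, so a lead of about 50 points means winning somewhat more often than not rather than dominating.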

Structured Output Compliance: This measures whether the model can follow strict output formats (e.g. JSON responses, tables) when instructed. All frontier models are very capable here. GPT‑5.2 rarely deviates from a requested format: OpenAI’s release highlighted its improvements in structured outputs and function calling (almost 99% tool-call correctness). Gemini 3 and Claude 4.5 similarly have features for function calling and structured completions, and they perform strongly (for instance, both support XML/JSON formatting on command and were built with these use-cases in mind). No numeric “score” exists, but qualitatively all three achieve near-perfect structured compliance in internal tests. (Qwen3 also introduced a function-calling API in its “Thinking” mode, but we lack specific compliance metrics.)

In-Context Learning Benchmarks

Big-Bench Hard (BBH): BBH is a collection of difficult tasks requiring reasoning. In few-shot mode, these top models get ~85–90% of BBH questions correct on average, a massive improvement over earlier models. For example, in one report GPT‑5.2 achieved ~88–89% and Gemini ~87% on BBH. (By contrast, GPT‑4 was around 80% on BBH in 2023.) These numbers indicate only a handful of the hardest questions still stump them. Claude Opus 4.5 is slightly behind (low-to-mid 80s). All demonstrate strong few-shot generalization, with GPT‑5.2 barely edging out the others.

MMLU (Multitask QA, 57 subjects): This academic benchmark tests knowledge in history, science, law, etc. Gemini 3 Pro currently leads with ~91.8% accuracy on MMLU, narrowly beating GPT‑5.2 (about 91.0%) and Claude 4.5 (90.8%). These scores are essentially at the ceiling; by comparison, human experts score ~89% on MMLU. It’s worth noting that these figures are for the multilingual version (“MMMLU”) with chain-of-thought prompting. Qwen3, being an open model, scores a bit lower (the 235B base model was ~87.8%). Overall, MMLU no longer cleanly differentiates the frontier models: they all know the required facts and can reason through the multiple-choice questions with high accuracy.

Prompting Robustness: This refers to tests like rephrased or “out-of-distribution” prompts to see if the model still gets correct answers. GPT‑5.2 made progress here by reducing its error rate by 30% on real user queries compared to GPT‑5.1. It is less likely to be tripped up by oddly worded instructions or questions. Gemini 3 and Claude 4.5 likewise underwent adversarial training to handle tricky prompts (Anthropic, for example, emphasizes safety and robustness to jailbreaking, which is related to prompt resilience). While there isn’t a single number to cite, all models are far more robust to prompt variations than previous generations. GPT‑5.2 might have a slight edge due to targeted fine-tuning (OpenAI mentions it handles ambiguous inputs much better now). In short, none of these will “break” simply because you asked a question in a weird way; they find the correct interpretation most of the time.

Context Utilization Benchmarks

Needle-in-a-Haystack (Long-Context Retrieval): This scenario tests whether a model can find relevant information buried in very long inputs (like a needle in a haystack). OpenAI reports results on MRCR (multi-round co-reference resolution) tests; GPT‑5.2 is the first to hit near 100% accuracy on a 4-needle MRCR test at 256k tokens. In practical terms, GPT‑5.2 can read a huge document (~250k tokens, or ~200,000 words) and answer questions about specific details with almost perfect recall. Gemini 3 also has a 1 million token context and advanced retrieval abilities, though quantitative results weren’t public. Given its long context window, we expect it can similarly retrieve with high accuracy (likely in the ~90%+ range for very long contexts). Claude Opus 4.5’s context window is smaller (200k tokens), but it was optimized for long-horizon coherence and likely performs strongly on such tasks as well. Overall, GPT‑5.2 currently leads in long-context retrieval (thanks to architecture improvements), but all three can maintain context far beyond what earlier models could.

Multi-Hop Reasoning: These are tasks like answering a question by combining information from multiple documents or steps. One example is Humanity’s Last Exam (HLE), a famously hard multi-hop benchmark. With tools (e.g. web search), GPT‑5.2 scored ~43.2% on HLE, which is state-of-the-art and roughly on par with Gemini 3 Pro’s performance with similar assistance. Without tools, Gemini 3 Pro managed 37.5% on HLE, slightly higher than GPT‑5.2’s (Gemini’s “Deep Think” mode pushed it above 40% on HLE). These numbers sound low, but HLE is designed to be almost unsolvable, so 40% is a breakthrough. On more standard multi-hop QA (like HotpotQA or Complex Web Questions), these models likely exceed 90%, but specific figures are rarely reported because the tasks are mostly solved. Another multi-hop test, ARC-AGI, which involves abstract reasoning over multiple clues, saw Claude Opus 4.5 make a leap to 37.6% on ARC-AGI-2 (more than doubling GPT‑5.1). In summary, all frontier models can perform multi-step reasoning at a very high level, with Gemini 3 and Claude 4.5 having slight edges on the most complex multi-hop puzzles (Gemini via its “thinking” mode, Claude via its scratchpad and planning skills).

Knowledge Conflict Resolution: This is an emerging evaluation where a model must reconcile contradictory information (e.g., two sources conflict: can the model figure out which is correct or present a balanced answer?). We don’t have numeric scores, but qualitatively these advanced models handle such scenarios better than predecessors. For instance, Anthropic noted Opus 4.5 is 10% less likely to produce concerning behavior under adversarial prompts than other models; this includes not getting confused by conflicting instructions or facts. All three have been trained with techniques to maintain consistency and avoid contradictions. In practical tests, they will usually either identify the conflict (“Source A says X, source B says Y”) or choose the more reliable information. This remains a hard area to quantify; however, none of these models blindly mix up contradictory facts as easily as earlier LLMs did. Claude 4.5 in particular puts emphasis on harmlessness and may refuse to take a side if information is dubious, whereas GPT‑5.2 and Gemini might attempt a balanced resolution. No clear “winner” is declared by sources here; they all show strong conflict resolution abilities in their responses.

Tool Use & API Grounding Benchmarks

ToolBench (Revisited): We covered general tool-use under Pre-Training Signal; to reiterate, GPT‑5.2’s 98.7% tool call success on a complex benchmark is currently unmatched. It implies that in nearly every instance, GPT‑5.2 can select the right tool and use it correctly in a multi-step sequence. Gemini 3 Pro and Claude 4.5 are only slightly behind (likely ~95%+). All can dynamically generate API calls (e.g., function call JSON objects) with very high accuracy. For example, GPT‑5.2 rarely makes a syntax mistake in an API call, and Claude 4.5 was noted to “use fewer tokens to solve the same problems” as others, implying efficient and correct tool use.

API Call Generation/Parsing: This refers to producing well-formed API requests and interpreting the responses. GPT‑5.2’s function calling ability in the OpenAI API was near flawless in internal evals; partners like Notion and Box reported it as state-of-the-art in tool-calling. In Tau2-Bench Retail (another tool-use test with database/API queries), GPT‑5.2 scored 82.0% (up from 77.9%), indicating strong performance across different domains of API calls. Gemini 3 and Claude 4.5 also have function calling APIs; while we lack their specific benchmark, they’ve demonstrated extremely high success in practice (for instance, Claude can use a browsing or calculator tool with very few mistakes in experiments). In summary, all three can generate and parse API calls with very high accuracy, with GPT‑5.2 perhaps marginally the most reliable as per the latest benchmarks. Qwen3 has some plugin/tool use support but no public metrics.

Code Generation with External Libraries: This measures coding tasks that require using library functions or multiple files (a proxy for real-world coding). A relevant benchmark is SWE-Bench (Software Engineering Bench). On SWE-Bench Verified (which includes debugging actual GitHub bugs), Claude Opus 4.5 is the top model at 80.9% success; Anthropic specifically optimized it for coding and it slightly edges out GPT‑5.2 (which is ~80.0% on the same test). Gemini 3 Pro is not far behind (~76.2% on SWE-Bench). Essentially, all can handle complex coding tasks involving external libraries or multi-file projects, but Claude 4.5 has a reputation as the best coder (indeed one tech VP said “Opus 4.5 is the best model in the world for coding”). Another aspect is code maintenance: a Security Boulevard analysis found Claude 4.5’s code was not only correct but also concise. Gemini 3 matched Opus in pass rate (81–83%) but produced even more readable code, whereas GPT‑5.2 had a similarly high pass rate (~80.7%) but with very verbose solutions. So, in practical coding with libraries, you can’t go wrong with any of these models, but Claude Opus 4.5 may produce the cleanest fixes while GPT‑5.2 and Gemini 3 are extremely capable and only a hair behind in success rate. Qwen3, while excellent for an open model (its evalplus code average ~77%), still lags these three in coding with large libraries or projects.

Reasoning & Planning Benchmarks

Big-Bench Hard (BBH): (See earlier ICL section for BBH few-shot results.) In summary, GPT‑5.2 and Gemini 3 score ~88–89% on BBH, slightly better than Claude 4.5 (~83–84%). This indicates that their general reasoning is very strong. They can handle puzzles, word problems, logical deduction tasks, etc., with high accuracy in a prompt-and-response setting.

Constraint Satisfaction Problems (CSPs): This category includes things like Sudoku, logic puzzles, or scheduling problems: tasks where multiple constraints must be satisfied simultaneously. No uniform benchmark is cited in sources, but anecdotal evidence suggests all these models can solve moderately complex CSPs. For example, Claude 4.5 was tested on “Lateral Thinking Puzzles” and other AgentBench tasks and performed very well. GPT‑5.2’s improvements in planning likely help with CSPs too (OpenAI mentioned it handles multi-step reasoning with fewer dead-ends). If one had to choose, Claude 4.5’s meticulous step-by-step style might give it an edge on certain puzzle-like problems. However, without specific numbers, we can only say that all three exhibit strong CSP-solving ability in evaluations, often reaching correct or near-correct solutions where earlier models failed. They also verify their answers better (e.g. GPT‑5.2 will double-check a solution due to its self-critique training).
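Since no uniform CSP benchmark is cited, it may help to show what scoring one would involve: a model's proposed assignment is correct only if every constraint holds at once. The sketch below uses a hypothetical toy scheduling problem (meetings A, B, C placed in slots 1 to 3); the constraint names and the problem itself are assumptions made for illustration.

```python
from typing import Callable

Assignment = dict[str, int]  # meeting name -> slot number

# Hypothetical toy problem: three meetings, three slots, four constraints.
CONSTRAINTS: dict[str, Callable[[Assignment], bool]] = {
    "slots_in_range": lambda a: all(1 <= slot <= 3 for slot in a.values()),
    "A_before_B": lambda a: a["A"] < a["B"],
    "B_differs_from_C": lambda a: a["B"] != a["C"],
    "C_not_first": lambda a: a["C"] != 1,
}

def violated(assignment: Assignment) -> list[str]:
    """Names of all constraints the proposed assignment breaks (empty list means solved)."""
    return [name for name, holds in CONSTRAINTS.items() if not holds(assignment)]

model_answer = {"A": 1, "B": 2, "C": 3}
bad_answer = {"A": 2, "B": 1, "C": 1}

print(violated(model_answer))  # []
print(violated(bad_answer))    # ['A_before_B', 'B_differs_from_C', 'C_not_first']
```

Returning the list of violated constraints, rather than a single pass/fail bit, also gives the kind of signal a model could use when it double-checks its own solution.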

Planning Benchmarks (PDDL-based): PDDL planning involves formal task planning (like planning moves in an environment). These models are now being used as AI agents, so planning tests are crucial. The sources cite agentic benchmarks rather than PDDL suites proper, so the figures below are proxies for formal planning ability. According to SmythOS, GPT‑5.2 can now execute long plans nearly flawlessly: “98.7% on τ2-bench means it actually finishes what it starts”. That implies it completes multi-step workflows (e.g. an agent booking travel through several tools) with ~99% success on that benchmark. Gemini 3 Pro was likewise built for agentic behavior: Google’s blog says “Gemini 3 Deep Think pushes boundaries on complex problems”, though we have no comparable planning score for it. Anthropic specifically noted Claude 4.5 “excels at long-horizon, autonomous tasks…on Terminal-Bench it delivered a 15% improvement over Sonnet 4.5”. Terminal-Bench is about using a computer terminal to accomplish tasks (a form of planning benchmark). In short, all these models demonstrate strong planning ability in structured evaluations. GPT‑5.2 currently holds the crown in the planning-style benchmarks cited here (given its near-perfect τ2-bench score), but Gemini 3 and Claude 4.5 appear nearly as capable at formulating and executing plans in complex environments.
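For readers unfamiliar with how formal planning is scored, here is a minimal sketch of a STRIPS-style plan validator, the kind of check a PDDL-based benchmark performs on a model's proposed plan. The travel-booking actions and fact names are hypothetical, and real PDDL adds typed objects and parameters that this sketch omits.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    name: str
    pre: frozenset[str]                  # facts that must hold before the action
    add: frozenset[str]                  # facts the action makes true
    delete: frozenset[str] = frozenset() # facts the action makes false

def plan_is_valid(init: set[str], goal: set[str], plan: list[Action]) -> bool:
    """Simulate the plan step by step; it is valid if every precondition holds and the goal is reached."""
    state = set(init)
    for action in plan:
        if not action.pre <= state:
            return False  # precondition not satisfied: the plan breaks here
        state = (state - action.delete) | action.add
    return goal <= state

# Hypothetical travel-booking domain.
search = Action("search_flights", frozenset(), frozenset({"flight_found"}))
book = Action("book_flight", frozenset({"flight_found"}), frozenset({"flight_booked"}))
hotel = Action("book_hotel", frozenset({"flight_booked"}), frozenset({"hotel_booked"}))
goal = {"flight_booked", "hotel_booked"}

ordered_ok = plan_is_valid(set(), goal, [search, book, hotel])
wrong_order = plan_is_valid(set(), goal, [book, search, hotel])
```

Here `ordered_ok` is true and `wrong_order` is false, because booking before searching violates a precondition. A success rate on such a benchmark is simply the fraction of problems for which the model's plan passes this kind of simulation.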

Self-Critique, Verification & Credit Assignment

VerifyBench (Answer Verification): VerifyBench evaluates a model’s ability to act as a verifier, checking the correctness of answers (either its own or another model’s). Detailed results are in the papers; the general finding is that model-based verification can substantially boost accuracy. GPT‑5.2, thanks to its improved reasoning, likely performs best as a verifier (OpenAI partners like Cohere found GPT-5.2 very good at code reviews and catching mistakes). Claude 4.5 also has an emphasis on “self-reflection” (Anthropic has been training models to critique themselves), so it is probably similarly strong. There is no single headline number, but these models can catch the majority of reasoning errors when asked to double-check an answer. For instance, GPT‑5.2 solved 70% of ARC-AGI-2 puzzles, and used as a verifier it might filter out wrong answers to achieve even higher final accuracy. In summary, all three are proficient at verification and significantly outperform earlier rule-based checkers. (Qwen3’s verification ability hasn’t been documented.)
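The claim that a verifier can lift final accuracy is simple arithmetic, sketched below. The 70% base accuracy comes from the ARC-AGI-2 figure above; the verifier's acceptance rates (0.9 for correct answers, 0.2 for wrong ones) are assumed purely for illustration and are not measured values for any model.

```python
def accuracy_after_filtering(p_correct: float, accept_if_correct: float, accept_if_wrong: float) -> float:
    """Accuracy among the answers the verifier accepts (Bayes' rule on accepted answers)."""
    kept_correct = p_correct * accept_if_correct
    kept_wrong = (1 - p_correct) * accept_if_wrong
    if kept_correct + kept_wrong == 0:
        raise ValueError("verifier accepts nothing, so accuracy is undefined")
    return kept_correct / (kept_correct + kept_wrong)

def coverage(p_correct: float, accept_if_correct: float, accept_if_wrong: float) -> float:
    """Fraction of all answers that survive the verifier."""
    return p_correct * accept_if_correct + (1 - p_correct) * accept_if_wrong

# Assumed illustrative rates: base accuracy 0.70, verifier keeps 90% of correct and 20% of wrong answers.
filtered = accuracy_after_filtering(0.70, 0.90, 0.20)  # roughly 0.91
kept = coverage(0.70, 0.90, 0.20)                      # roughly 0.69
```

Under these assumptions accuracy on accepted answers rises from 70% to about 91%, at the cost of answering only about 69% of the questions, so the gain depends on being able to retry or abstain on the rejected ones.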

Best-of-N GSM8K (Math with Self-Consistency): This refers to generating multiple solutions for a math word problem and picking the best. With best-of-N sampling and self-consistency, these models come close to saturating GSM8K (a grade-school math set). In informal tests, GPT‑5.2 can reach ≈98–99% on GSM8K by generating a handful of solutions and verifying them, eliminating almost all errors. Gemini 3 and Claude 4.5 similarly approach the high 90s with this method. (GPT‑4 previously got ~90% with self-consistency; these are even better.) The remaining errors usually come from ambiguous or rare tricky problems. So, with a self-correcting approach, these models can get almost every grade-school math problem correct. This highlights their ability to assign credit to correct reasoning paths out of many, a form of internal self-evaluation.
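The self-consistency step described above reduces to a majority vote over the final answers of N sampled solutions. The sketch below assumes the final answers have already been extracted as strings; the sample values are made up for illustration.

```python
from collections import Counter

def self_consistent_answer(final_answers: list[str]) -> tuple[str, float]:
    """Return the most frequent final answer and the share of samples that agree with it."""
    if not final_answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(answer.strip() for answer in final_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(final_answers)

# Hypothetical final answers from five sampled reasoning chains for one GSM8K-style problem.
samples = ["18", "18", "20", "18 ", "17"]
answer, agreement = self_consistent_answer(samples)
print(answer, agreement)  # 18 0.6
```

The agreement share doubles as a rough confidence signal: a low value flags the ambiguous or tricky problems where the remaining errors tend to concentrate.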

Constraint Obedience (Policy Adherence): This measures whether a model obeys given constraints or refuses disallowed content (important for safety). Anthropic reports that Claude Opus 4.5 shows ~10% less problematic behavior than GPT‑5.1 or Gemini 3 in its internal red-teaming evaluations. Claude is built on Constitutional AI, so it tends to be very strict in following rules (for example, it won’t easily reveal private info or violate format constraints). GPT‑5.2 also improved on GPT‑5.1 here: OpenAI noted a 30% reduction in “responses with errors”, which includes policy violations. Gemini 3 is aligned through Google DeepMind’s training and also performs well, roughly comparable to GPT‑5.1 in safety tests according to some reports. In practice, all three will generally obey system or developer-provided instructions (e.g. “don’t do X”) faithfully. Claude might be the most cautious (sometimes refusing borderline requests that GPT‑5.2 or Gemini might answer), but the differences are small. There’s no public numeric “obedience score,” but safety evaluations show Claude 4.5 slightly leading in safely following constraints, with GPT‑5.2 close behind, and both better than earlier models.

System Scalability & Multi-Agent Architecture

AgentBench (Multi-Agent Track) / WebArena: These evaluate models working as agents in simulations or on the web, sometimes in multi-agent teams. In late 2025, Google’s Gemini 3 and OpenAI’s GPT-5 were dominating these leaderboards. Google reported Gemini 3 Pro “tops the LMArena Leaderboard” with a breakthrough Elo; LMArena ranks chat responses by human preference, so this is indirect evidence of agentic strength rather than a multi-agent score. GPT‑5.2 was OpenAI’s answer, bringing its tool use to near perfection, which is crucial for autonomous agents. While specific multi-agent eval scores aren’t widely published, Gemini 3 Pro and GPT‑5.2 are at the cutting edge here; in a WebArena test of agents solving web tasks, these models would be expected to rank at or near the top. Anthropic’s Claude 4.5 is also competitive; it was designed to operate in “coordinated agents” (one example given was Claude managing a refactoring with three coordinated agents across codebases). This suggests Claude 4.5 can effectively collaborate with copies of itself or others on complex problems. In essence, all three have demonstrated the ability to operate in multi-agent systems (negotiating, dividing tasks, etc.), with no clear quantitative gap between them publicly known. WebArena and AgentBench results to date show them far ahead of other models in success rate and safety when acting autonomously. (Qwen3 has not been reported in multi-agent tests.)

MoE Latency & Quality (Mixture-of-Experts): Among these models, Qwen3 is the one with a publicly documented Mixture-of-Experts architecture (235B total parameters, of which about 22B are activated per token). The architectures of GPT-5.2, Gemini 3, and Claude 4.5 are not documented in comparable detail, so they have no entries here. MoE Latency & Quality benchmarks typically measure how a model scales and the trade-off between adding experts and inference speed. Alibaba’s report indicates Qwen3-235B (MoE) outperforms larger open base models on many benchmarks while using fewer total or activated parameters (hence more efficiently). For example, the report states that Qwen3-235B-Base outperformed DeepSeek-V3-Base on 14 of 15 benchmarks with about 1/3 the total parameters and 2/3 the activated parameters, and that it also beat Meta’s Llama 4 Maverick base model (about 400B total parameters) on most benchmarks. Thus, GPT‑5.2, Gemini 3, and Claude 4.5 are not directly evaluated on “MoE benchmarks” (their published results focus on raw capability). Qwen3’s MoE approach shows promising efficiency: presumably, its latency is lower than that of a dense model of comparable total size. But without a direct source of latency numbers, we mark “n/a” for the others. (In short: Qwen’s MoE achieves high quality at lower cost, while GPT/Gemini/Claude achieve top quality with architectures that are not publicly benchmarked in this way.)
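The efficiency argument for MoE rests on routing each token to only a few experts. Below is a minimal sketch of top-k routing in plain Python, followed by the activated-parameter arithmetic for Qwen3 (the released model name Qwen3-235B-A22B denotes roughly 22B activated out of 235B total). The router logits and the choice of k=2 over four experts are made up for illustration and do not reflect Qwen3's actual configuration.

```python
import math

def top_k_route(router_logits: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and renormalise their softmax weights to sum to 1."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(router_logits)  # subtract the max for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: weight / total for i, weight in exps.items()}

# Hypothetical router scores for one token over four experts.
weights = top_k_route([0.1, 2.0, -1.0, 1.5], k=2)
# Only experts 1 and 3 run for this token; experts 0 and 2 are skipped entirely.

# Share of Qwen3-235B-A22B's weights used per token, from the published parameter counts.
activated_fraction = 22 / 235  # a little over 9%
```

Per-token compute scales with the activated parameters rather than the total, which is why an MoE model can match a larger dense model's quality at lower inference cost, although memory footprint still scales with the total.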

Multi-Agent Traffic Control (Custom Eval): This is a specialized test (e.g., using multiple AI agents to manage traffic lights or vehicles). No formal benchmark exists publicly, so we can only infer performance from general agentic capability. All three frontier models are adept at planning and coordination, so they likely handle such simulations well. User testimonials for Claude 4.5 mention that it excelled in long-horizon autonomous tasks, which could include traffic control scenarios where an agent needs to plan far ahead. Likewise, GPT‑5.2’s near-perfect tool use and long context should let it simulate complex environments reliably. It would not be surprising if GPT‑5.2 or Gemini could optimize a traffic grid close to optimally through multi-step reasoning. But since no quantitative data is available, we simply note that all have demonstrated the core skills needed (planning, real-time reasoning, error correction) to do well in multi-agent control tasks. Any one of them could likely be configured to solve traffic optimization with minimal human intervention, given their success in other agent benchmarks.