Engram - maybe a New Axis for LLMs

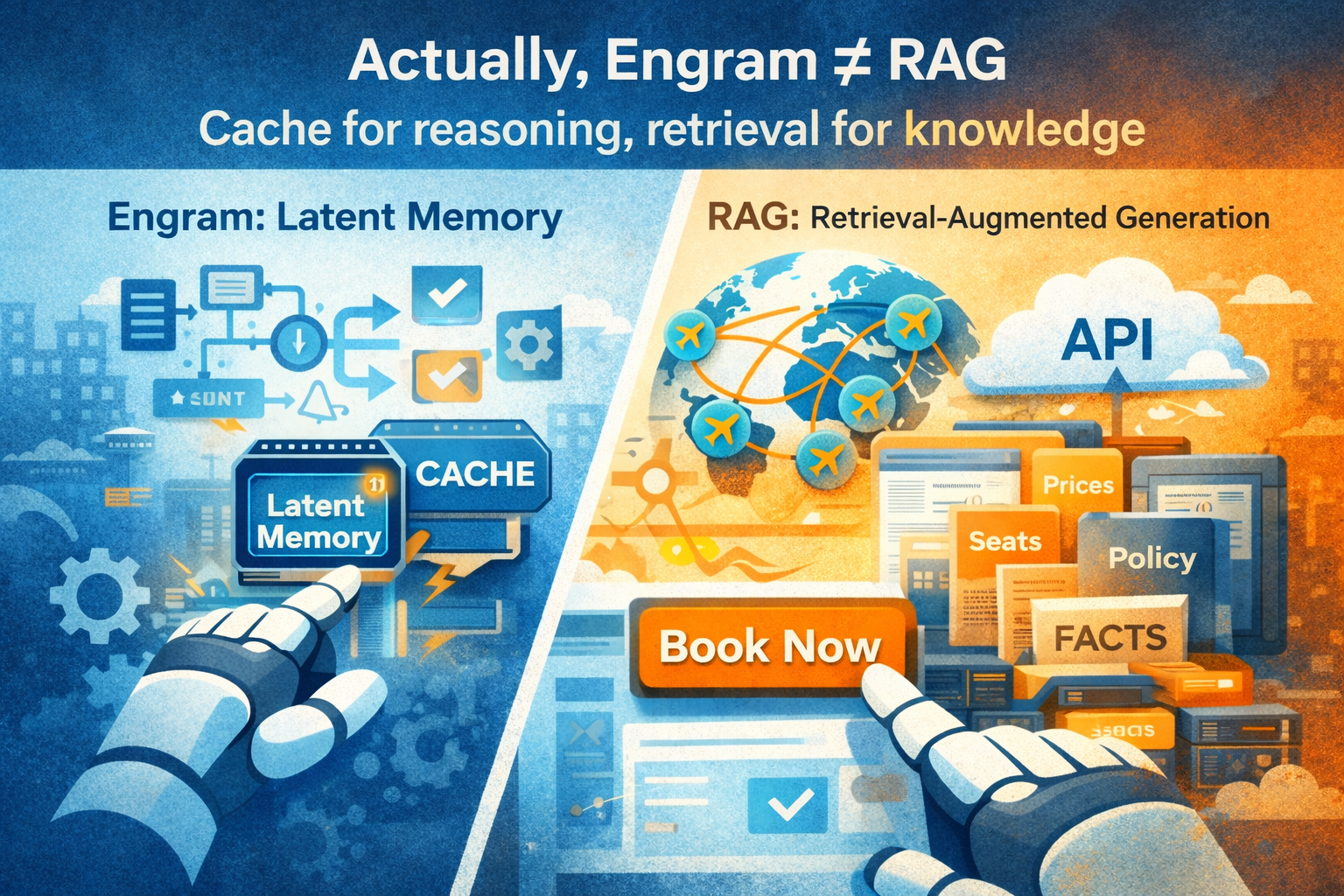

If you think Engram replaces RAG, you’re missing the point. Engram is CPU cache, RAG is disk I/O. One accelerates execution, the other expands knowledge. The future stack will need both.

DeepSeek’s Engram paper (arXiv:2601.07372) is one of the most interesting LLM architectural proposals of 2026 so far. But online discussion quickly polarized: some read it as “built-in RAG,” others as “RAG is obsolete now,” and others as a clever compression trick for factual memory.

All three are misunderstandings.

Engram belongs to a broader architectural trend: Agentic + RL + Memory allocation, where the goal is not to make models memorize more facts, but to allow them to spend more computation on reasoning and workflows. And in this trend, Engram introduces a distinct new axis: conditional latent memory, not external symbolic memory.

Correcting Misconceptions

Misconception 1: “Engram = internal RAG”

Incorrect.

RAG is fundamentally external knowledge I/O:

- symbolic (text-level)

- user-controlled

- hot-swappable

- knowledge-expanding

Engram is fundamentally inference-path modification:

- embedding-level

- trained during pretraining

- non-editable post-hoc (today)

- compute-reallocation

- model-internal

The two live in completely different parts of the stack.
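To make "different parts of the stack" concrete, here is a minimal sketch of the two interfaces. It is an illustration under stated assumptions, not the paper's implementation: `DOC_STORE`, `rag_augment`, `latent_memory_augment`, and the scalar `gate` are all hypothetical names introduced here.

```python
from typing import Dict, List

# Hypothetical external store: plain text that a user can edit at any time.
DOC_STORE: Dict[str, str] = {
    "diana": "Diana, Princess of Wales (example passage).",
}


def rag_augment(prompt: str, query_key: str) -> str:
    """RAG-style step: text in, text out, applied before the model runs."""
    passage = DOC_STORE.get(query_key, "")
    return f"{passage}\n\n{prompt}" if passage else prompt


def latent_memory_augment(
    hidden: List[float], memory: List[float], gate: float
) -> List[float]:
    """Latent-memory-style step: vector in, vector out, inside the forward pass.

    `gate` is an assumed scalar that controls how much of the memory
    vector is mixed into the hidden state; 0.0 suppresses it entirely.
    """
    return [h + gate * m for h, m in zip(hidden, memory)]
```

The first function can pick up new knowledge the moment `DOC_STORE` changes. The second only ever sees vectors that were fixed at training time, which is why swapping one for the other does not make sense.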

Misconception 2: “Engram means we don’t need RAG anymore”

Also incorrect.

RAG stores facts (A is B, A has C, A worked at D).

Engram stores entity patterns + lexical templates + chunking behavior.

From a storage objective:

- RAG → expands knowledge scale

- Engram → optimizes computational structure

From a systems perspective, they are complementary, not competing components.

This distinction shows up in the paper’s ablation results: when the Engram module is suppressed at inference, factual knowledge tasks collapse while reading comprehension remains mostly intact. That suggests Engram carries parametric factual and statistical mappings learned during pretraining, not the swappable symbolic knowledge that RAG supplies at query time.

Why Engram Matters: A New Allocation of Depth & Attention

One underappreciated result of the DeepSeek paper is that Engram reduces the burden of token reconstruction in lower layers.

Today, Transformers reconstruct entity identity like:

Wales → Princess of Wales → Diana, Princess of Wales

This consumes multiple blocks of computation to recover a static lookup.

Engram makes that lookup constant-time via hashed N-grams.
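A rough sketch of what a constant-time hashed N-gram lookup could look like is shown below. Everything in it is an assumption made for illustration: the table size, the embedding width, the use of CRC32 as the hash, and the names `MEMORY_TABLE`, `ngram_slot`, and `lookup` are not taken from the paper, and the table is seeded noise standing in for learned weights.

```python
import random
import zlib
from typing import List, Sequence

TABLE_SIZE = 1024  # hypothetical number of memory slots
EMBED_DIM = 8      # hypothetical embedding width

# Stand-in for a table learned during pretraining; here it is seeded noise.
_rng = random.Random(0)
MEMORY_TABLE: List[List[float]] = [
    [_rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)] for _ in range(TABLE_SIZE)
]


def ngram_slot(tokens: Sequence[str]) -> int:
    """Map an N-gram to a table slot with a deterministic hash (CRC32 here)."""
    key = "\x1f".join(tokens).encode("utf-8")
    return zlib.crc32(key) % TABLE_SIZE


def lookup(tokens: Sequence[str], position: int, n: int) -> List[float]:
    """Fetch the memory vector for the N-gram ending at `position`.

    The cost is one hash and one index, independent of the table size.
    """
    start = position - n + 1
    if start < 0:
        return [0.0] * EMBED_DIM  # not enough left context for an N-gram
    return MEMORY_TABLE[ngram_slot(tokens[start:position + 1])]


tokens = ["Diana", ",", "Princess", "of", "Wales"]
vec = lookup(tokens, position=4, n=3)  # trigram "Princess of Wales"
```

The same trigram always lands on the same slot regardless of what precedes it, so no layers are spent rebuilding it. A toy hash like this will collide on distinct N-grams; any real design needs a strategy for that, which this sketch leaves out.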

The net effect is not “more knowledge,” but:

- less compute spent reconstructing static patterns

- more depth and attention freed for reasoning

This matches the paper’s findings: Engram models post clear gains on reasoning benchmarks (BBH +5.0, ARC-Challenge +3.7, MATH +2.4), even though Engram was expected to help with knowledge tasks first.

The Right Analogy: CPU + Cache, Not CPU + Disk

Some people have likened Engram to memory, but a more accurate analogy is:

DeepSeek is adding L1/L2 cache to the Transformer compute stack.

Cache:

- accelerates execution

- reduces recomputation

- does not expand knowledge

- does not replace external storage

Similarly:

- Engram accelerates the reasoning path

- RAG expands the knowledge surface

Both co-exist.
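The cache half of the analogy can be seen in ordinary software with Python's `functools.lru_cache`. This is only an analogy in code, not a model of Engram itself; `resolve_entity` is a hypothetical stand-in for an expensive but deterministic reconstruction.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def resolve_entity(phrase: str) -> str:
    """Hypothetical stand-in for an expensive, deterministic reconstruction."""
    return phrase.strip().title()


resolve_entity("princess of wales")  # first call: computed (cache miss)
resolve_entity("princess of wales")  # second call: served from cache (hit)

stats = resolve_entity.cache_info()  # exposes hits, misses, maxsize, currsize
```

The cache removes repeated work, but it can only return what `resolve_entity` could already produce. Anything the function cannot compute still has to come from outside, which is the role RAG plays.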

This mirrors how CPU design evolved: Intel introduced L1 cache in the 486 era and expanded to L2 in later Pentium generations, not to store more programs but to improve instruction throughput.

A Broader Trend: Agentic + RL + Memory

Engram fits into a larger architectural trend affecting both pre-training and post-training:

- Test-time compute → SR, PDR, TreeRL, lookahead

- Agentic workflow → tool use, planning, verification

- Memory & credit assignment → latent memory, process reward, deliberative tokens

Engram sits squarely in the third category, memory and credit assignment.

This trend is not trying to make the model “know more.” It is trying to make the model:

allocate compute toward reasoning, not representation reconstruction.

This is the same direction that RL-based training for agentic workflows is pushing, and a key reason Engram improves reasoning even without alignment fine-tuning.

Future Direction: RAG + Latent Memory + Test-time Compute + Tool Use

Once you view Engram correctly, the future architecture looks additive, not substitutive:

RAG + latent memory + test-time compute + tool use, all working together

Instead of:

“RAG vs. Engram”

We should expect:

“RAG AND Engram AND RL AND tool use AND test-time compute”

This is similar to how databases evolved into distributed systems: more axes, not fewer.

Closing Thoughts

Engram does not make RAG obsolete, and it does not turn models into external knowledge stores.

It does introduce a new axis for sparse capacity allocation that:

- reduces low-level token reconstruction,

- frees up depth and attention for reasoning,

- integrates efficiently with compute hardware,

- complements agentic workflows and RL,

- and appears to scale predictably without extra FLOPs.

It is a compelling addition to the LLM design space and one that we expect to see widely adopted in the next generation of pre-training stacks.

At Otto, we are building along this same axis. Our travel agent is RL-native and memory-aware: it does not merely surface flight and hotel options, but executes a personalized workflow end-to-end, covering preference modeling, multi-objective ranking, policy compliance, loyalty optimization, booking, ticketing, and post-booking service. Tool use, test-time compute, and process-level credit assignment allow the agent to refine choices, verify actions, and correct errors during execution.

The result is not a chatbot that answers questions, but an operational agent that completes jobs, adapts to user context, and handles changes smoothly over the lifespan of a trip. Our goal is to integrate the most advanced LLM techniques, from foundation modeling to agentic RL and memory, into Otto to deliver a best-in-class AI executive assistant for business travel.