Why BFS-Style Test-Time Search Isn’t Enough - and Why RLVR Matters for Reasoning Agents

Test-time BFS has become a popular way to boost reasoning in LLMs, but it quietly assumes a fixed and fully known task distribution. Taleb argued that this assumption fails in real-world environments, where distributions shift and finite samples mislead us. Reinforcement learning with verifiable rewards (RLVR) updates the policy itself, so exploration becomes learned, cumulative, and cheaper over time.

Is BFS-Style Test-Time Search Enough?

There’s been growing interest in breadth-first search (BFS)-style inference techniques for LLM reasoning: Tree-of-Thought, Parallel-Distill-Refine (PDR), Self-Refine, Multiplex Thinking, etc. At a high level, these operators all avoid premature commitment by exploring multiple futures before collapsing to an answer. Because of that shared BFS pattern, a shortcut-driven misconception emerges:

“If the distribution of tasks is fixed and fully known, then BFS at test time + one RL pass is more than enough.”

This intuition is attractive, but incomplete. It treats exploration as a pure search problem rather than a learning problem. To see why that breaks down, we need to distinguish between executing exploration and learning to explore.

Below are three lenses that make this distinction clear:

1. The Policy Doesn’t Improve Over Time

If we only apply BFS-style operators at test time, the underlying model policy is fixed. The agent can explore multiple tool calls, planning branches, or reasoning chains, but it gains no lasting improvement. Every new query recreates the same search tree from scratch.

That’s fine in benchmark math settings with a stationary distribution and cheap rollouts. But real agent workloads rarely satisfy those assumptions. Consider:

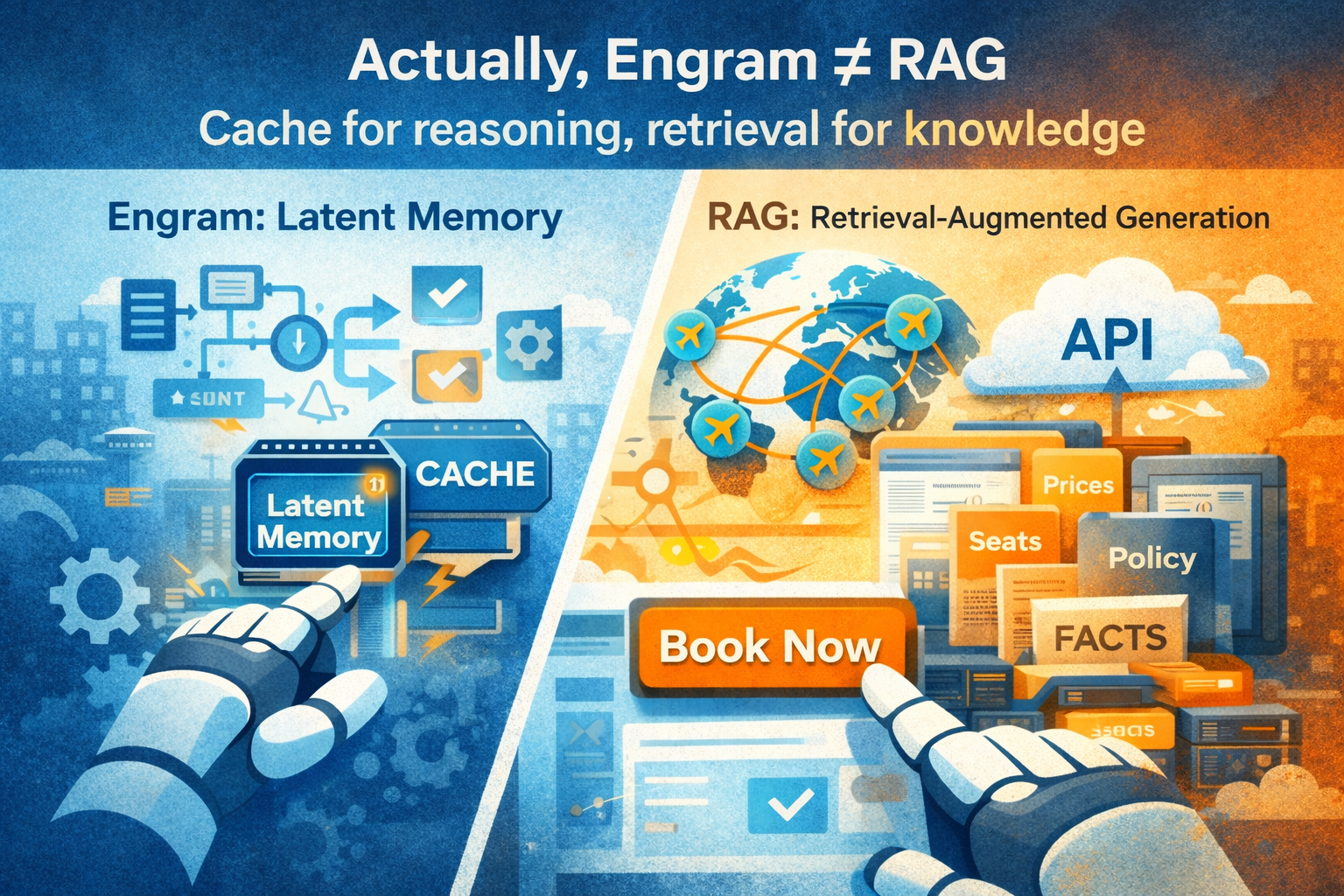

- Tool schemas change,

- Prices and inventory shift,

- APIs introduce new constraints,

- User preferences and travel policies grow more complex,

- Sparse payoffs arrive late (bookings, conversions, cancellations),

- Long-horizon credit assignment becomes necessary.

In these regimes, a fixed policy can only execute exploration again and again; it cannot reduce its reliance on exploration over time. BFS gives short-term search quality, but not long-term efficiency.
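To make the contrast concrete, here is a minimal toy sketch, not a real RLVR pipeline. It assumes a single task with eight candidate branches, exactly one of which a verifier accepts, and a softmax policy over those branches. The names `NUM_BRANCHES`, `CORRECT`, and `policy_gradient_step` are illustrative, and the update uses the exact expected policy gradient rather than sampled rollouts so the result is deterministic.

```python
import math

def softmax(logits):
    """Convert logits into a probability distribution over branches."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def expected_samples_to_success(p_success):
    """Mean number of independent draws until the first verified success."""
    return 1.0 / p_success

def policy_gradient_step(logits, rewards, lr):
    """One exact gradient-ascent step on J = sum_i pi_i * r_i.

    For a softmax policy, dJ/dlogit_i = pi_i * (r_i - J).
    """
    probs = softmax(logits)
    expected_reward = sum(p * r for p, r in zip(probs, rewards))
    return [
        logit + lr * p * (r - expected_reward)
        for logit, p, r in zip(logits, probs, rewards)
    ]

# Hypothetical toy task: 8 branches, only branch 3 passes the verifier.
NUM_BRANCHES = 8
CORRECT = 3
rewards = [1.0 if i == CORRECT else 0.0 for i in range(NUM_BRANCHES)]

# Frozen policy: test-time search only, the logits never change.
frozen_logits = [0.0] * NUM_BRANCHES

# Learned policy: same starting point, updated with verifiable rewards.
learned_logits = [0.0] * NUM_BRANCHES
for _ in range(200):
    learned_logits = policy_gradient_step(learned_logits, rewards, lr=1.0)

frozen_p = softmax(frozen_logits)[CORRECT]
learned_p = softmax(learned_logits)[CORRECT]

print(f"frozen : p(success)={frozen_p:.3f}, "
      f"expected samples={expected_samples_to_success(frozen_p):.2f}")
print(f"learned: p(success)={learned_p:.3f}, "
      f"expected samples={expected_samples_to_success(learned_p):.2f}")
```

In this toy, the frozen policy needs the same expected number of samples on every query, while the updated policy needs fewer as training proceeds. A real agent faces many tasks, noisy gradients, and shifting rewards, so the numbers here only illustrate the direction of the effect.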

2. Entropy Shaping

RL aligns the exploration distribution with reward. Without RL, exploration remains uninformed: wide when it should narrow, narrow when it should widen. Reasoning operators like TreeRL, PDR, and Multiplex Thinking treat entropy as a controllable resource:

- during learning, high entropy promotes diverse rollouts,

- during deployment, entropy anneals as the policy matures.

The result is not just more exploration, but targeted exploration. Without this shaping, BFS degenerates into brute force. RLVR upgrades it into guided search under uncertainty.
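As a rough illustration of entropy as a controllable quantity, the sketch below measures the Shannon entropy of a softmax distribution over candidate branches while a sampling temperature is lowered. Temperature is only one possible stand-in for entropy control and is an assumption here, as are the linear schedule in `annealed_temperature`, its default endpoints, and the example `branch_logits`. In an actual RL setup the entropy would also change because the logits themselves are trained.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over branch logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def annealed_temperature(step, total_steps, start=2.0, end=0.5):
    """Hypothetical linear schedule from `start` down to `end`."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start + frac * (end - start)

# Illustrative logits for four candidate branches.
branch_logits = [2.0, 1.0, 0.5, 0.0]

for step in (0, 50, 100):
    t = annealed_temperature(step, 100)
    h = entropy(softmax(branch_logits, t))
    print(f"step={step:3d} temperature={t:.2f} entropy={h:.3f} nats")
```

For fixed logits, lowering the temperature never increases the entropy, so the printed values shrink as the schedule progresses: wide sampling early, narrower sampling later.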

3. Amortization of Exploration

The deepest distinction is amortization. BFS at test time is inherently non-amortized: exploration cost is paid fresh for every query. RLVR amortizes the cost of exploration into the policy itself. Once the model learns which branches tend to succeed, two things happen:

- Pass@1 increases (less need for branching),

- Pass@k requires smaller k (cheaper inference).

In recommendation and planning domains, this is more than a performance optimization - it’s the path from “search engine” to “solver.” Exploration stops being a per-query tax and becomes a training-time investment.
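The link between Pass@1 and the k needed for a given Pass@k can be sketched with simple arithmetic. The sketch assumes each of the k samples succeeds independently with the same probability p, in which case Pass@k = 1 - (1 - p)^k. Real samples from one model are usually correlated, so treat this as an idealized estimate; the function names and the 90% target are illustrative.

```python
import math

def pass_at_k(p_single, k):
    """Probability that at least one of k independent samples succeeds."""
    return 1.0 - (1.0 - p_single) ** k

def min_k_for_target(p_single, target):
    """Smallest k with pass_at_k(p_single, k) >= target (independence assumed)."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    if p_single <= 0.0:
        raise ValueError("p_single must be positive")
    if p_single >= target:
        return 1
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_single))

# Hypothetical before/after numbers: Pass@1 rises from 0.2 to 0.6 after training.
for p in (0.2, 0.6):
    k = min_k_for_target(p, target=0.9)
    print(f"Pass@1={p:.1f} -> k={k} for 90% (Pass@{k}={pass_at_k(p, k):.3f})")
```

Under these assumptions, raising Pass@1 from 0.2 to 0.6 cuts the samples needed for a 90% success rate from 11 to 3, which is the per-query saving that amortization buys.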

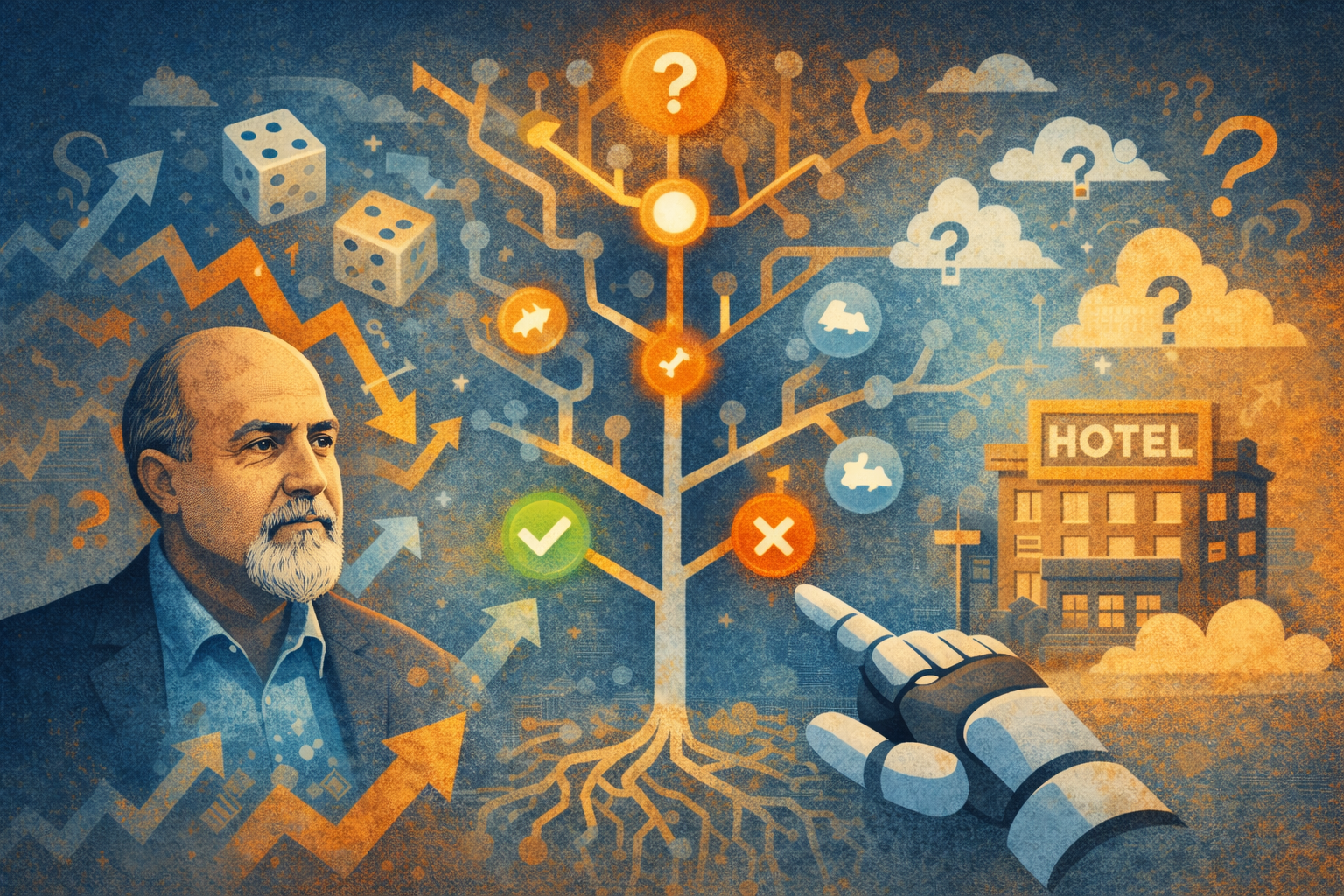

The Taleb Objection: The Distribution Is Never “Fixed and Fully Known”

The original misconception rests on a hidden assumption: that the task distribution is stationary, fully observed, and well-behaved.

Nassim Nicholas Taleb has spent much of his Incerto series dismantling this idea. In Fooled by Randomness he argues that humans routinely overestimate how much they understand a distribution from a finite sample of it. In The Black Swan and Antifragile he generalizes that critique:

- we do not observe the full distribution,

- the tails matter far more than the mean,

- distributions change under the very act of sampling,

- and most importantly, the distribution we think we are sampling from is often not the one we are actually in.

In Taleb’s language: assuming that a distribution is “fixed and fully known” because we’ve sampled it is a category error. Real environments are dominated by hidden variables, structural breaks, and fat-tailed events: exactly the kinds of phenomena that make static BFS insufficient and learning essential.

This maps directly to agentic LLM settings: user preferences shift, tools update, prices fluctuate, reward signals are delayed, and new tasks arise. The distribution is neither fixed nor fully known; at best it is revealed over time.

The Core Thesis

This leads to two practical conclusions that often get missed in discussions of Tree-style or Multiplex-style reasoning:

Exploration must be learned, not just executed.

and

Learned exploration amortizes cost across future queries.

This is the core value proposition of RLVR: it converts exploratory compute into persistent policy improvements. BFS alone can produce answers. RLVR builds solvers.

At Otto, this is not just abstract theory. We are building the best AI executive assistant for work travelers, a domain that is deep, constraint-heavy, and constantly shifting. Trip planning, rebooking, loyalty optimization, policy compliance, supplier inventory, and post-booking servicing all sit in Taleb’s world: the distribution is never fixed, never fully observable, and often adversarial.

To support that level of complexity, we use both BFS-style test-time reasoning operators and RLVR systems. The former give the agent the ability to explore and branch on a single query; the latter convert that exploration into policy improvements over time. Together they allow the traveler experience to get better week by week, month by month, without requiring the user to “prompt harder.”

This combination is what makes a true reasoning agent possible: not just a chat interface over APIs, but a system that actually learns how to navigate the domain on behalf of the traveler.